Te-Lin Wu (吳德霖)

Google Scholar / LinkedIn / GitHub

I am one of the founding members, working on AI, at a stealth video AI startup.

Previously, I was a researcher at Character.ai working on large language models (LLMs) and model post-training for smarter and more amusing chatbots.

Prior to that, I obtained my PhD at UCLA PlusLab, advised by Nanyun (Violet) Peng,

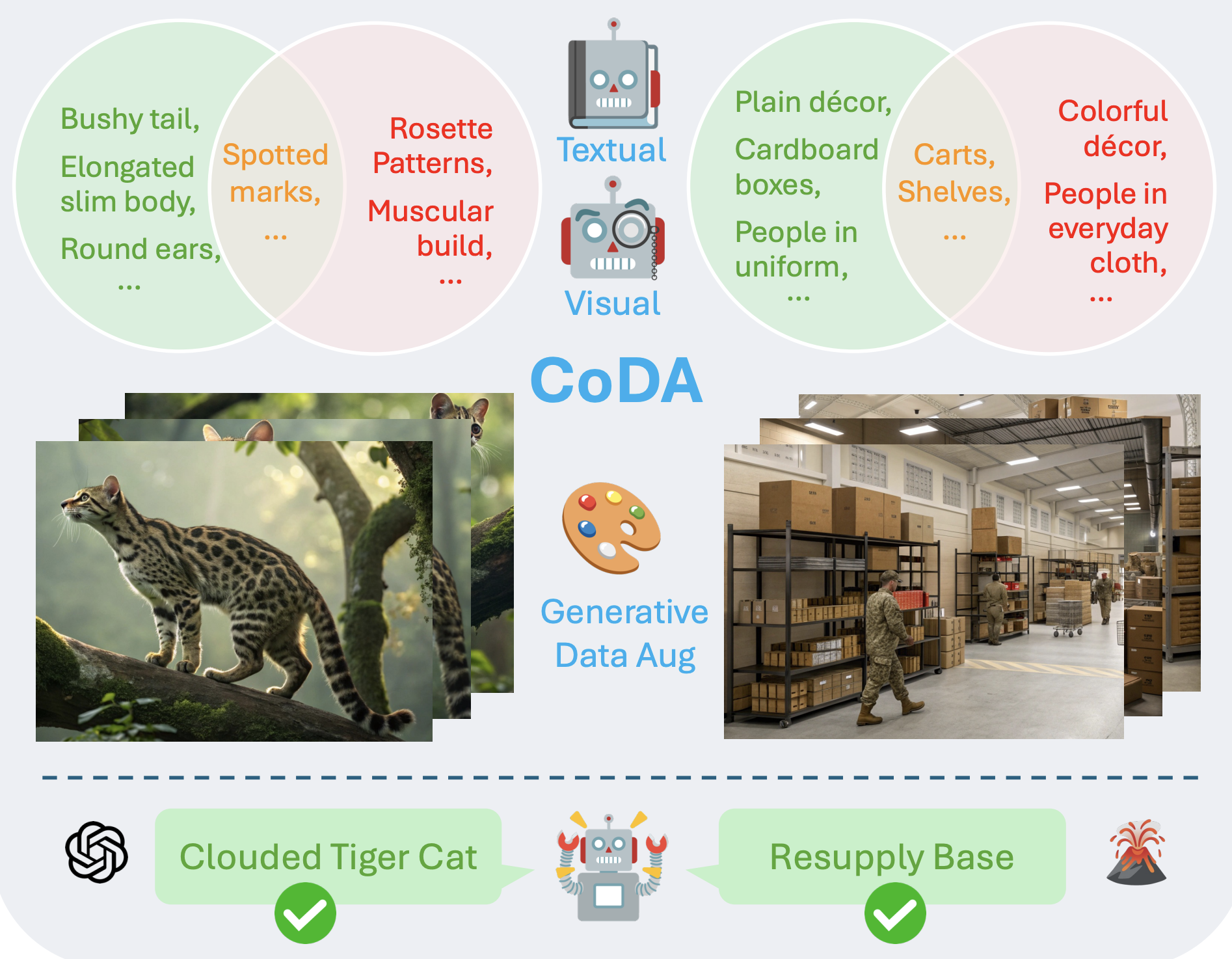

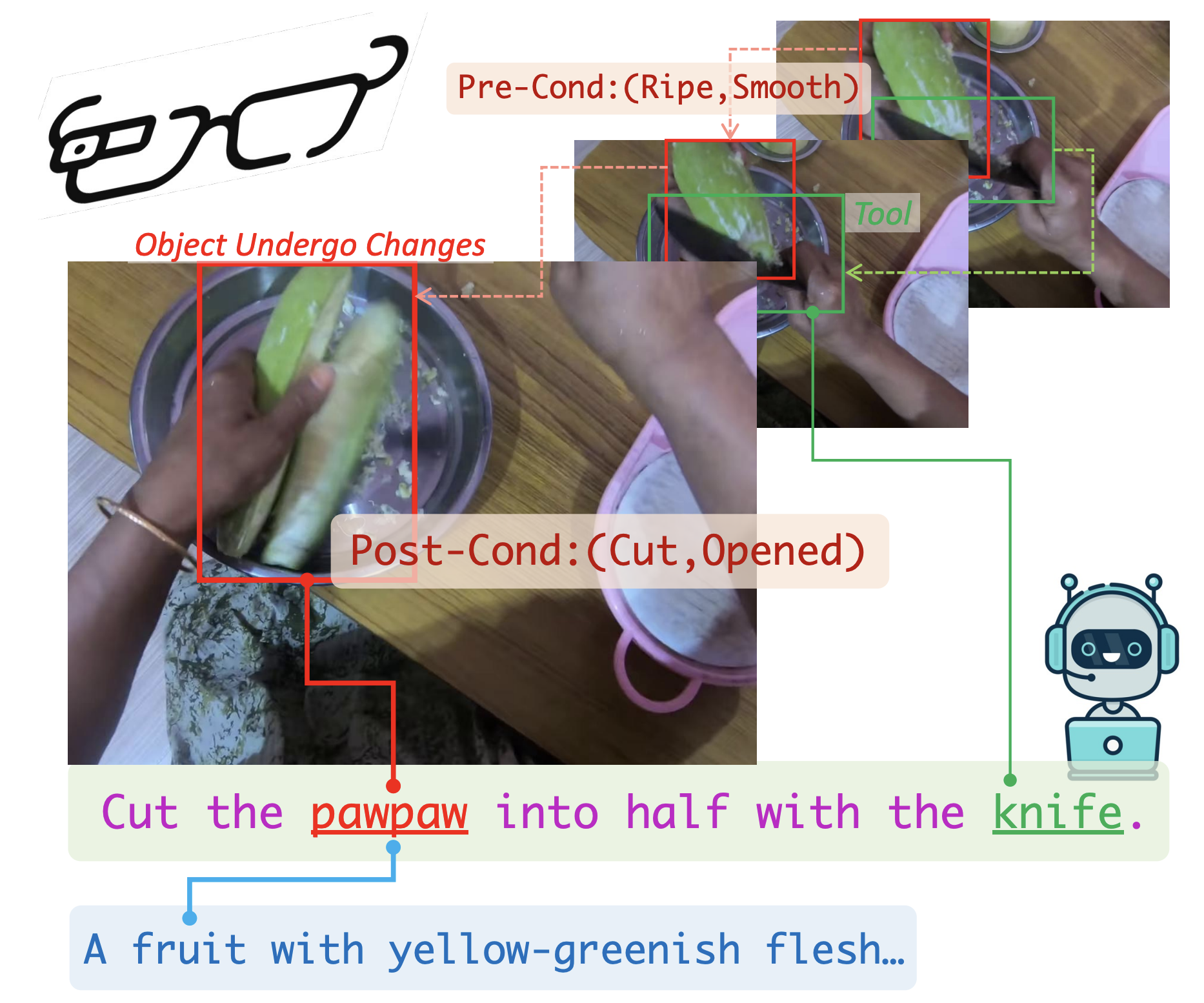

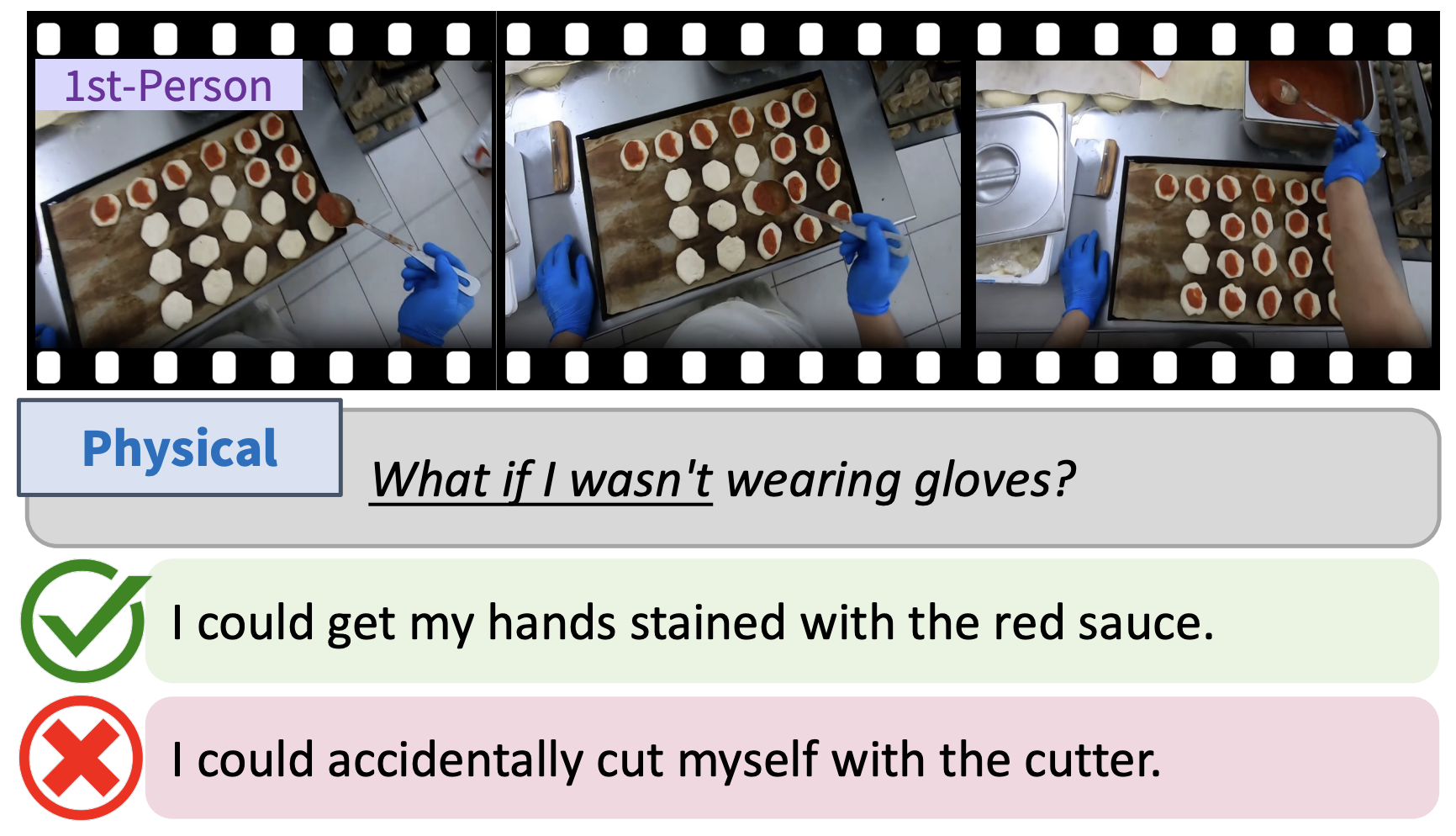

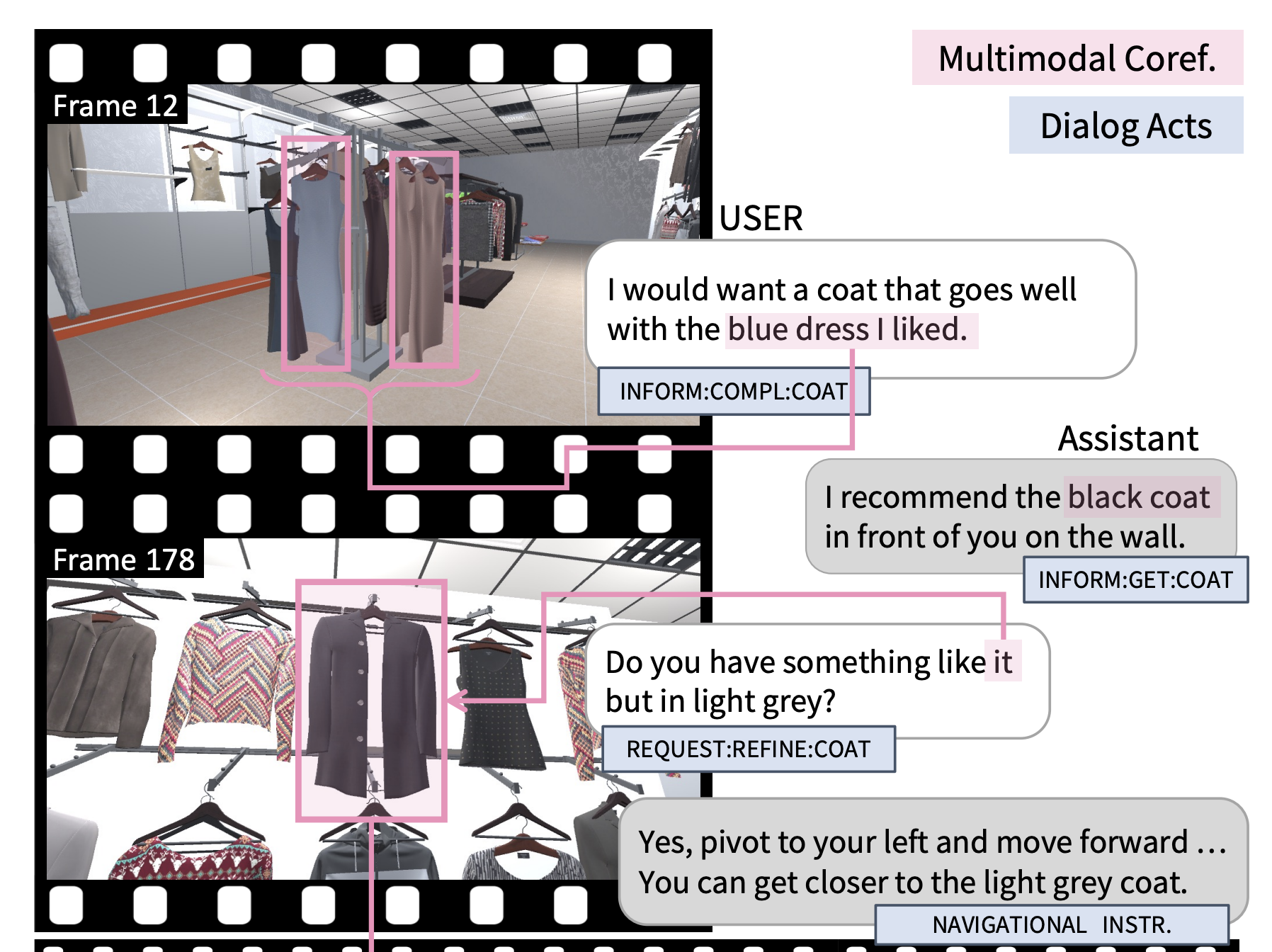

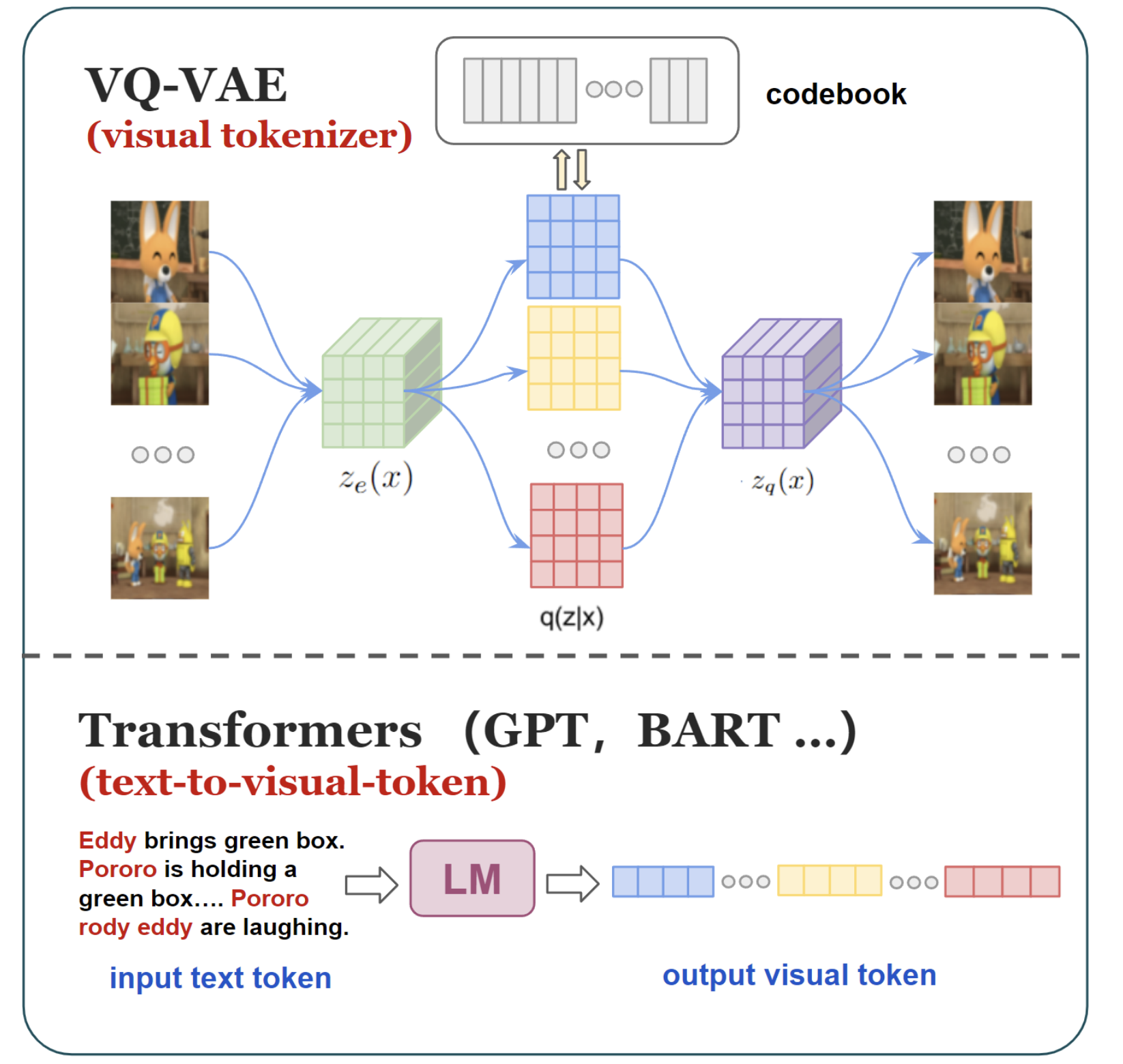

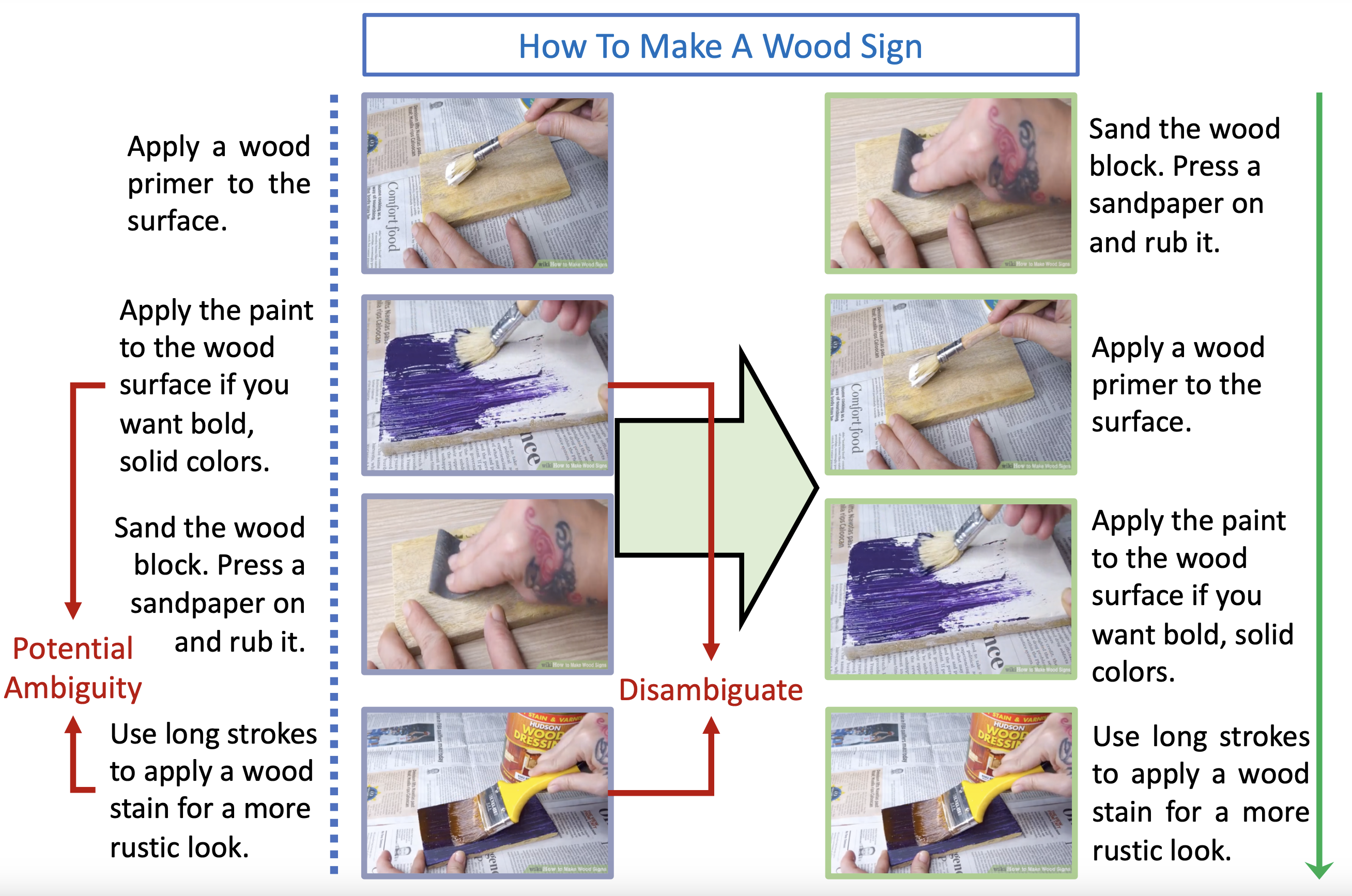

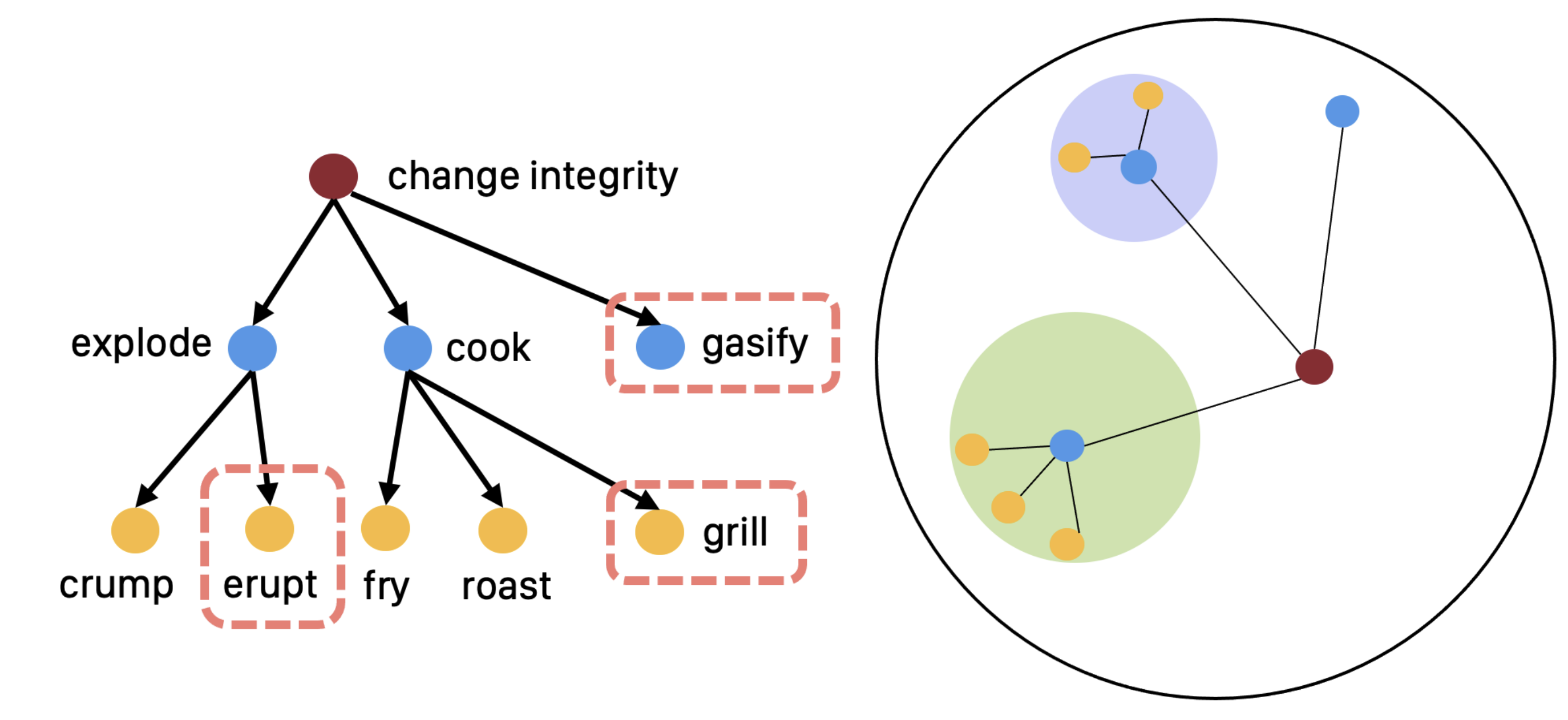

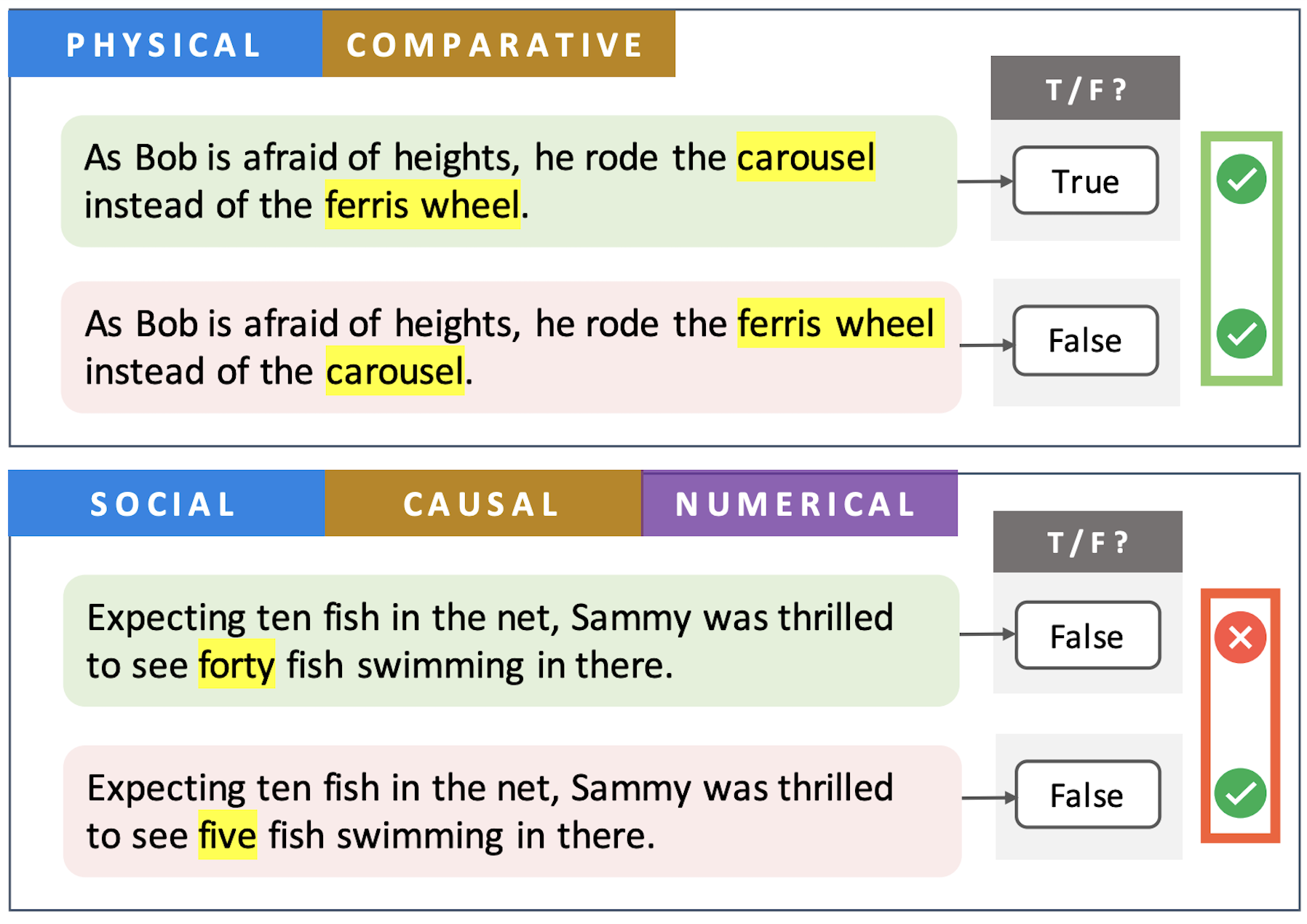

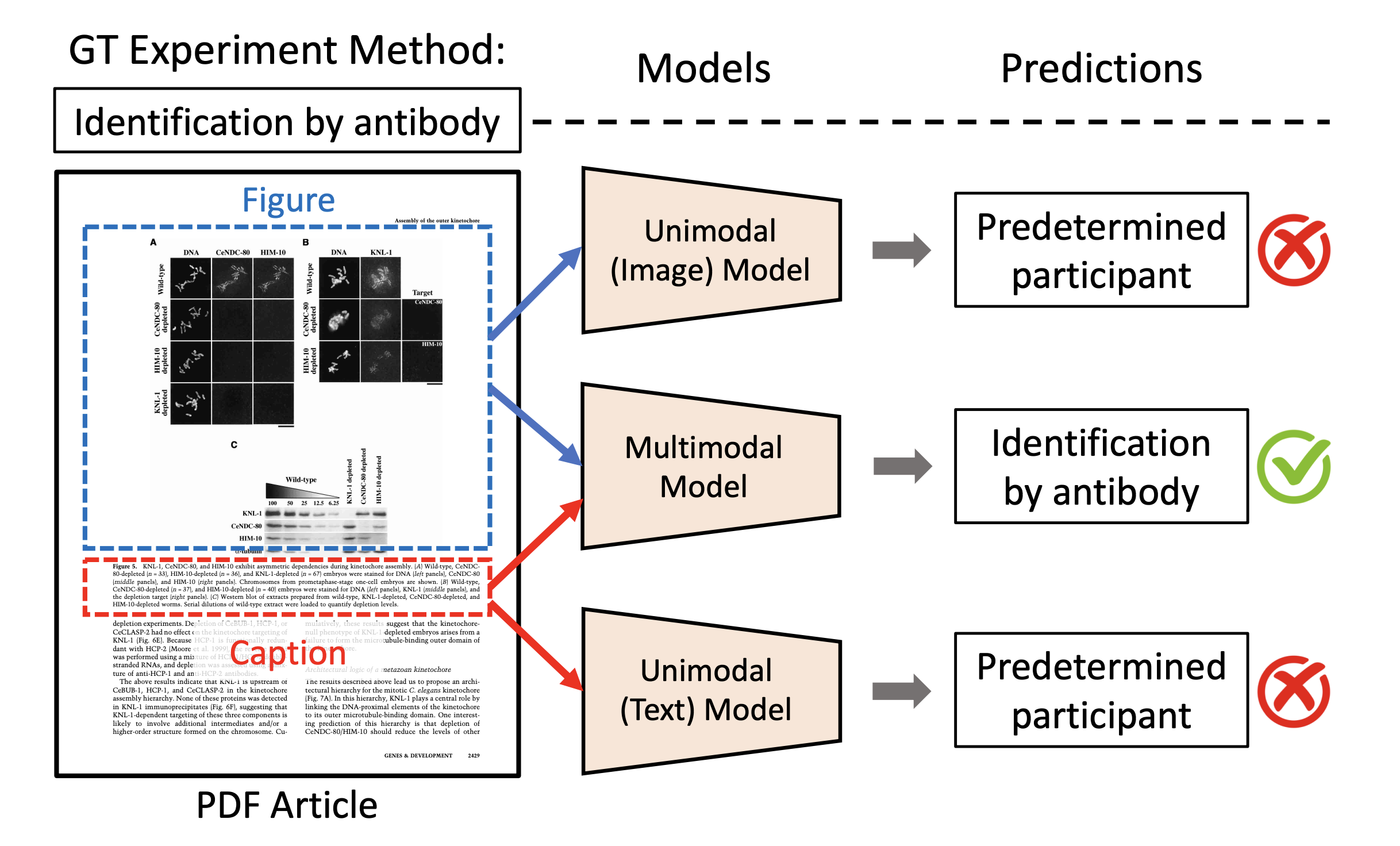

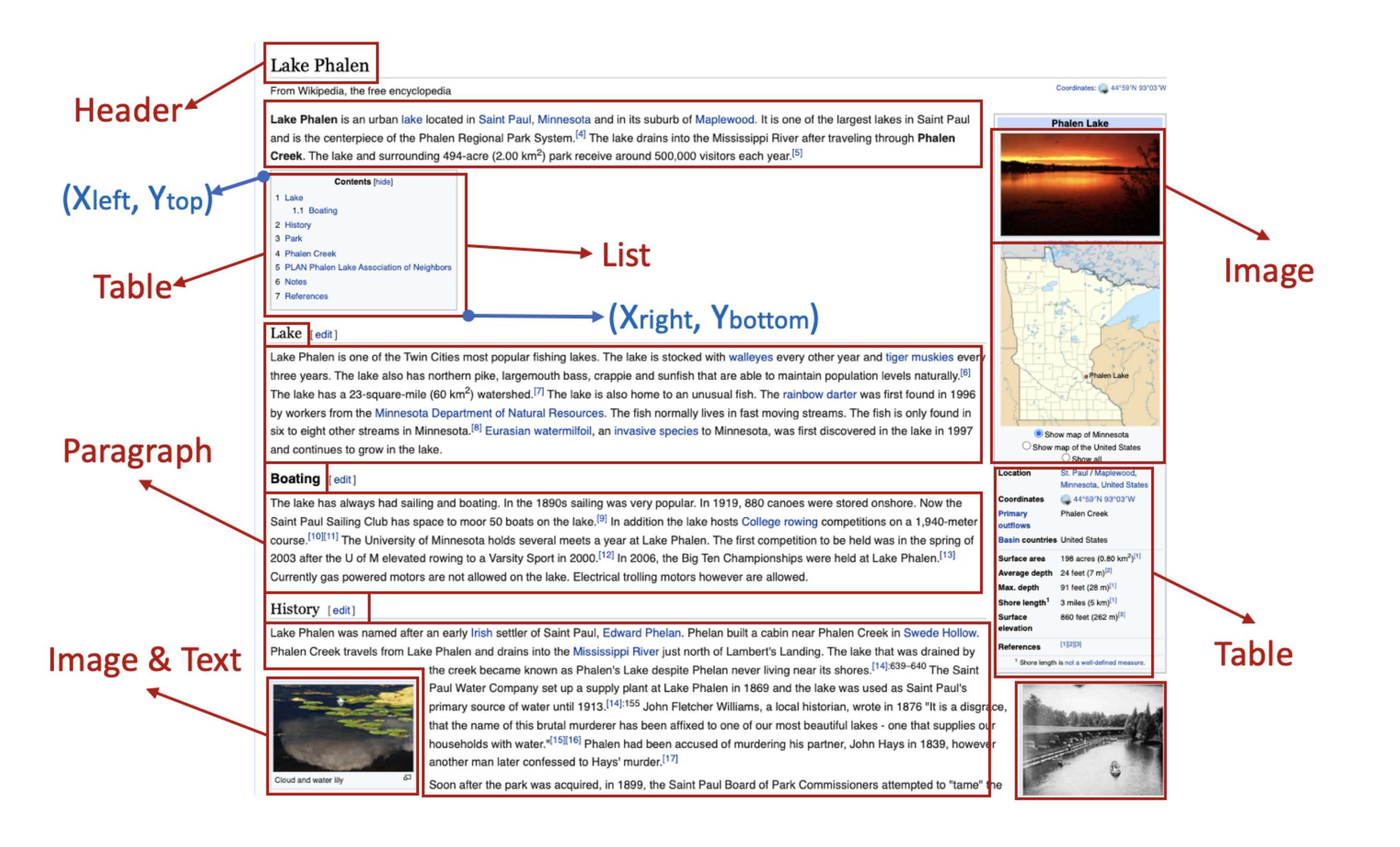

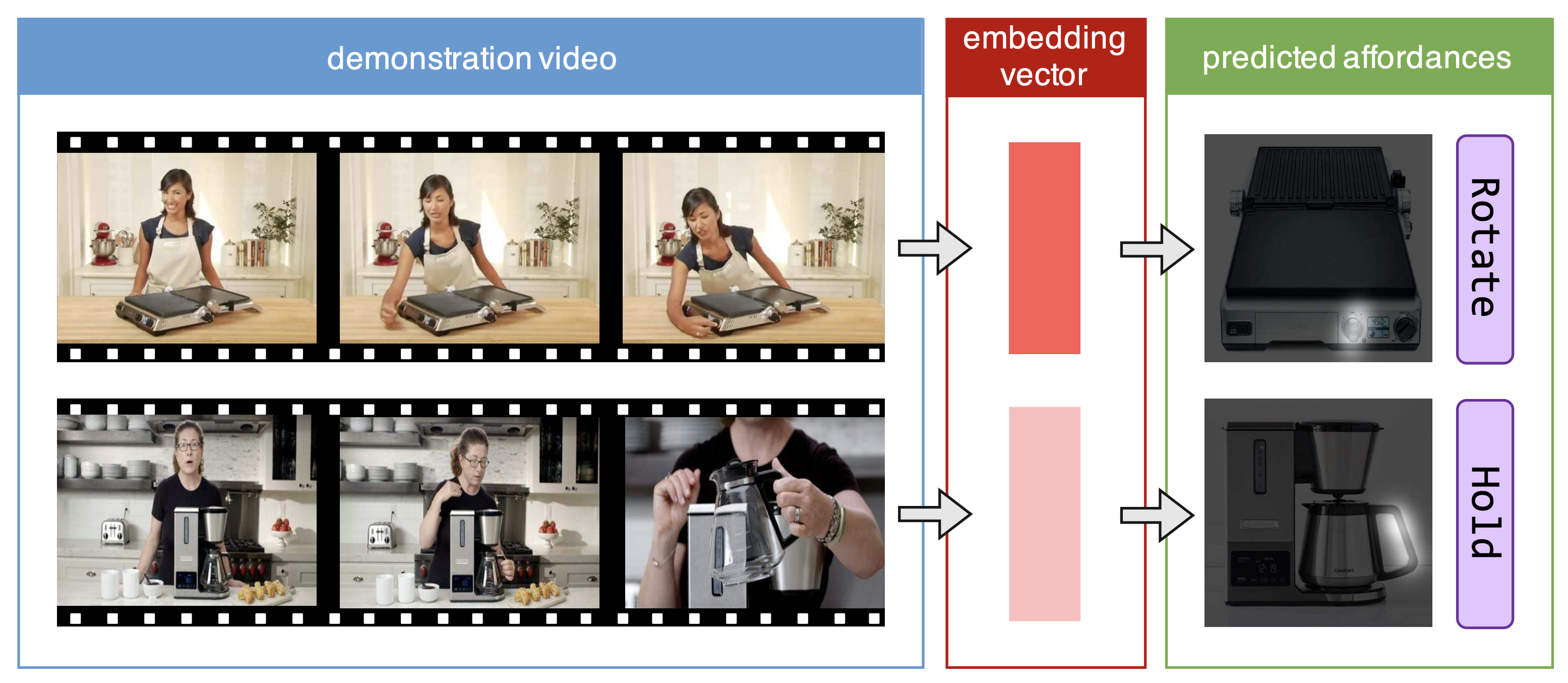

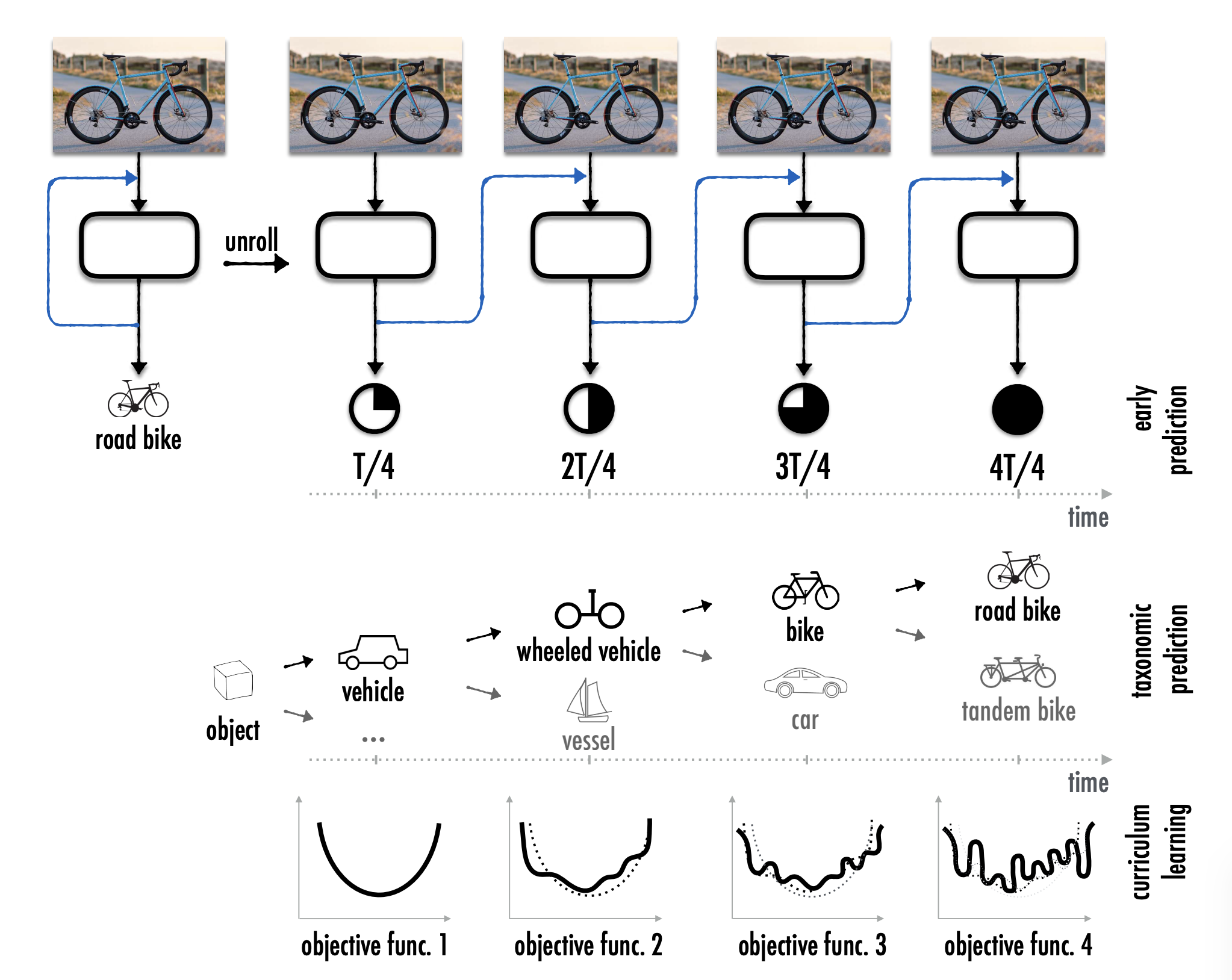

where my research focused on multimodal models across NLP and computer vision.

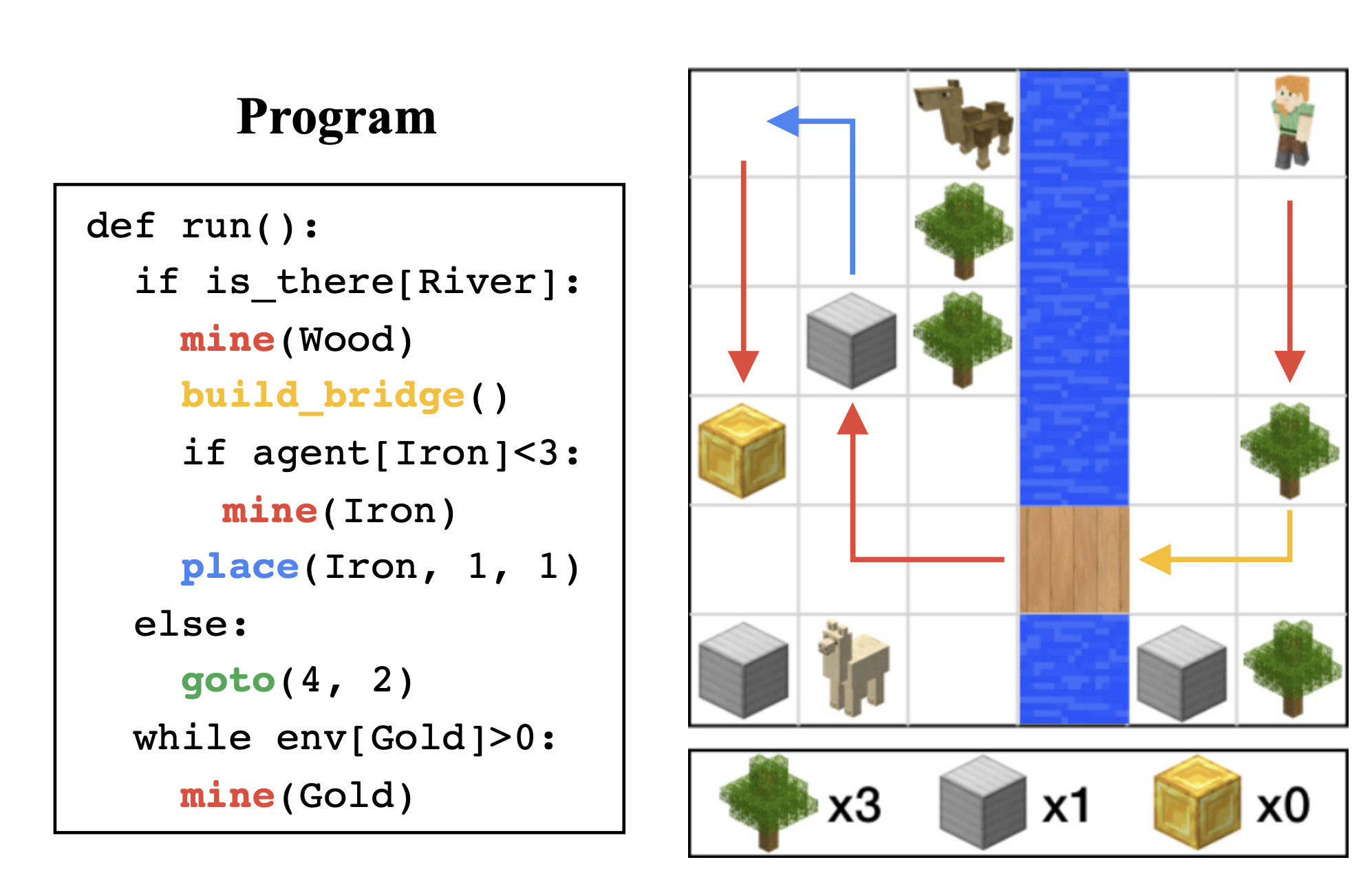

I have also worked with Joseph J. Lim on reinforcement learning and vision for robotics.

Prior to my PhD, I obtained my M.S. at Stanford University, where I was advised by Silvio Savarese, and I did my undergrad at National Tsing-Hua University (國立清華大學).

Over the summers, I've been lucky to work as a research intern in several wonderful groups, including Google Research, Meta Reality Labs, Amazon AI, and Adobe Research.